Deep Learning and Neural Networks in Automation

1.0 Overview

With the need to manage building resources, building automation and systems integration have become the norm in large industrial buildings, campuses and high-rises, and they are slowly creeping into the consumer housing market. The technology for connecting edge devices to systems, and systems to the cloud, has advanced dramatically. The need to manage and analyse data from hundreds or thousands of devices across many networks is now pushing innovation in the industry even further. Data is often called the oil of the 21st century; if so, advanced data processing, machine learning and AI can be considered the refineries of the 21st century.

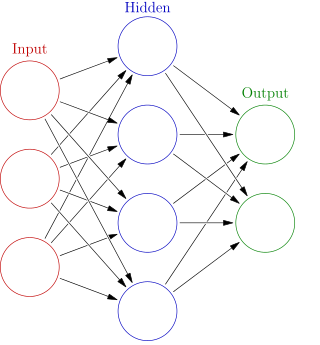

Today, we want to look at Deep Learning, a subset of machine learning, and Neural Networks in the Automation industry. Deep learning is a method of processing data and finding patterns in it to make decisions, in a way that imitates a living brain. The theory is that with deep learning, a computer is able to train itself to process and learn from data. Neural Networks are a set of algorithms designed to recognize patterns in a way that mimics the neural networks of human and animal brains. Deep learning stacks Neural Networks on top of each other to build much more complex entities that produce an output for a given input. The relationship between Deep Learning and Neural Networks, then, is that Neural Networks are the structure deep learning uses to make its decisions. Without Neural Networks there would be no deep learning.
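Stacking can be made concrete with a small Python sketch. It assumes the simplest kind of artificial neuron, a threshold unit that fires when a weighted sum of its inputs plus a bias is positive. No single unit of this kind can compute XOR, but two stacked layers of them can. The weights and biases are hand-picked for illustration and are hypothetical; a real network would arrive at its weights through training.

```python
# A minimal sketch: threshold "neurons" stacked in two layers.
# Weights and biases are hand-picked for illustration (hypothetical), not trained.

def neuron(inputs, weights, bias):
    """Fire (return 1) if the weighted sum of the inputs plus the bias is positive."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0

def xor_network(a, b):
    # Layer 1: two neurons behaving as OR and NAND gates
    h_or = neuron((a, b), (1, 1), -0.5)
    h_nand = neuron((a, b), (-1, -1), 1.5)
    # Layer 2: one neuron behaving as an AND gate over the layer-1 outputs
    return neuron((h_or, h_nand), (1, 1), -1.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

Each layer only combines the outputs of the layer below it, yet the stack computes something no single unit can.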

The functional difference between Neural Networks and Deep Learning is that Neural Networks need to be trained, while Deep Learning can extend its training by itself: it is capable of self-training and learning. From a practical standpoint, Neural Networks are provided as a tool in some automation PLCs, SCADA systems, VFDs and other controllers, whereas if you wanted to employ Deep Learning, you would need an Amazon-sized computer to make it work. In other words, Deep Learning is not available as an automation technology... yet.

Neural Networks, on the other hand, are already employed in the automation industry. For the rest of the article, we will look at Neural Networks in the automation industry.

What problem do Neural networks solve?

Imagine a technology existed that said to you: "Don't worry about guessing at or analyzing all the things you don't know; when you are not sure, let me make the decision for you. I can assure you that when I make decisions for you, I will either do what you trained me to do (what you could anticipate) OR, when I am given a set of inputs you did not anticipate, I will make the least wrong decision."

Sounds pretty useful, doesn't it? We all know the infamous Donald Rumsfeld quote. In essence, he said there are things we know we don't know and, worse, there are things we don't know we don't know. A system that can cope with those unknowns and still produce a good output would be an extremely useful tool. It is.

Sometimes a system will be presented with a set of inputs that nobody anticipated. What should the system do with its outputs? We all know how to program if-then-else statements, and we all know it's those pesky else branches that are so hard to anticipate, or to know how to handle, in even slightly complex systems. Often we leave out the else statement because we just don't know what to do. Analytical thinking cannot solve every problem.
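The missing-else problem shows up quickly even in a toy rule set. The Python sketch below is hypothetical: a fan-speed controller in which every branch covers a situation the programmer anticipated, while everything else falls through with no answer.

```python
# A hand-written rule set (hypothetical) for a ventilation fan.
# Every branch covers a situation the programmer anticipated.

def fan_speed(occupied: bool, temp_c: float):
    if occupied and temp_c > 26:
        return "high"
    elif occupied and 20 <= temp_c <= 26:
        return "low"
    elif not occupied and temp_c > 30:
        return "low"
    # No final else: what should happen when the room is occupied and
    # below 20 C, or empty and at or below 30 C? Nobody decided.
    return None  # the unanticipated case: the rule set has no answer

print(fan_speed(True, 28))   # high
print(fan_speed(True, 15))   # None
```

Adding a catch-all else only moves the problem: someone still has to choose what that branch should output.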

How do Neural Networks work?

Explaining properly how they work requires a whole series of block diagrams and truth tables, and then a very concentrated effort to follow the detail of the logic. We will leave this for later; we don't want you to stop reading this article yet. For a simple idea, read the section of this article called Truth Tables.

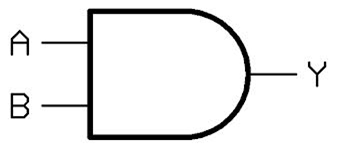

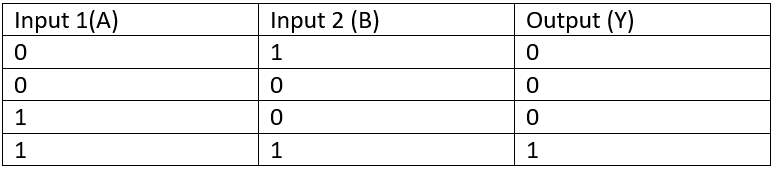

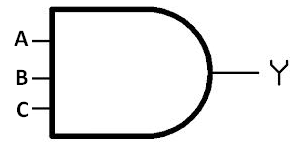

Imagine a series of Boolean logic gates (AND, NOT, OR, XOR, etc.) connected in a system. We will call this system an Artificial Neuron. Training the Neuron means building a partial truth table. For a given set of inputs, you teach it what output you require. Then you train it with a different set of inputs and teach it what output you want for those. You are starting to teach the Neuron what its truth table should be. What you do not do is train it for every possible set of inputs.

An artificial neural network is an interconnected group of nodes, akin to the vast network of neurons in a brain. Here, each circular node represents an artificial neuron and an arrow represents a connection from the output of one neuron to the input of another.
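Training in the partial-truth-table sense amounts, in code, to filling in some rows of a lookup table. The Python sketch below assumes a three-input Artificial Neuron with one binary output. The trained patterns are hypothetical, and a plain dictionary stands in for the Neuron's memory.

```python
# Training a (hypothetical) three-input neuron = recording input -> output pairs.
# Only some of the 8 possible input patterns are trained.
truth_table = {}

def train(inputs, output):
    truth_table[tuple(inputs)] = output

train((0, 0, 0), 0)
train((1, 0, 0), 1)
train((1, 1, 0), 1)
train((1, 1, 1), 1)

print(len(truth_table), "of", 2 ** 3, "patterns trained")  # 4 of 8 patterns trained
print(truth_table.get((0, 0, 1)))  # None: this pattern was never trained
```

A plain lookup has no answer for the untrained rows; deciding what to output for them is the job of the Neuron's design.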

Now, if you design the Artificial Neuron well, then when it is presented with something you did not train it to recognize, it will set its output to the least wrong output or, expressed another way, to the output it judges best for the partial match on the inputs.

What is wrong with Neural Networks

Aside from all the hype that surrounds them...

(1) They are not adaptive

This is a very serious flaw. AI people call them adaptive, but they are not. They are trained, and they are dumb.

When you see, your eye presents a pattern to your brain's vision processing centers (there are 14 of them). When your eye recognizes a face, say the person you are talking to, it recognizes the face even though the pattern landing on your eye changes constantly: your eye moves, the person moves, your focus changes. The pattern landing on your optical sensor cells changes all the time, yet your vision processing centers present a constant output: 'face'. Your brain's neurons seem infinitely adaptive to the actual input pattern presented to them.

With a neural network, all you have to do to make it fail is shift the input pattern by just one bit. Said another way, you could train an artificial neural network to recognize 'face', but if the face moved just one pixel to the left, the network would fail so badly it would be a joke.
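The one-pixel failure is easiest to see with a deliberately extreme example. The Python sketch below assumes a recognizer that compares each pixel position of a stored pattern against the same position in the input, with nothing built in to handle movement. The striped "face" is a hypothetical 1-D stand-in for an image.

```python
# A minimal sketch, assuming a matcher that compares pixels at fixed positions
# (no mechanism for shift invariance). The "face" is a hypothetical 1-D pattern.

def match_score(trained, presented):
    """Fraction of the trained 'on' pixels that are also 'on' in the presented pattern."""
    on = [i for i, p in enumerate(trained) if p == 1]
    return sum(presented[i] for i in on) / len(on)

def shift_left(pattern, n=1):
    """Shift a pattern n pixels to the left, padding with zeros on the right."""
    return pattern[n:] + [0] * n

face = [1, 0, 1, 0, 1, 0, 1, 0]
print(match_score(face, face))              # 1.0
print(match_score(face, shift_left(face)))  # 0.0
```

The shape is unchanged, but because every "on" pixel now lands on a position the matcher expects to be "off", the score drops from a perfect match to zero.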

The designers and theorists of neural networks argue that they could build in more complexity to handle the shifted input pattern. What about when the face is partially obscured, out of focus, or 10 years older than the trained pattern? "Oh, we will just keep adding complexity, and with more and more processing power the network could eventually solve the problem." We call BS.

(2) The least wrong output can still be wrong

If the least wrong output can still be wrong, one needs to be concerned about human safety and equipment protection. For this reason, neural networks are normally applied in very limited contexts, i.e. to provide control under very average operating conditions. The outputs from neural networks should not be applied at the extremes of the normal operating range, or in situations where tiny changes in output can produce huge multiplier effects.
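One way to act on this advice is to wrap the network in a conventional supervisory layer. The Python sketch below is a hypothetical example, not a standard API. The network's output is used only while the measured input stays inside an assumed normal operating envelope, it is clamped to assumed safe limits, and a pre-engineered fallback output takes over everywhere else. All limits and names are illustrative assumptions.

```python
# Hypothetical supervisory guard for a neural-network output.
# The envelope limits and fallback value are illustrative assumptions.

NORMAL_MIN_C, NORMAL_MAX_C = 18.0, 28.0   # assumed normal operating range of the input
OUTPUT_MIN, OUTPUT_MAX = 0.0, 100.0       # assumed safe limits for the output (e.g. % valve)
FALLBACK_OUTPUT = 50.0                    # assumed conventional, pre-engineered output

def guarded_output(measured_temp_c: float, nn_output: float) -> float:
    # Outside the normal operating range: do not trust the network at all.
    if not (NORMAL_MIN_C <= measured_temp_c <= NORMAL_MAX_C):
        return FALLBACK_OUTPUT
    # Inside the range: use the network's output, clamped to safe limits.
    return min(max(nn_output, OUTPUT_MIN), OUTPUT_MAX)

print(guarded_output(22.0, 63.5))   # 63.5
print(guarded_output(22.0, 140.0))  # 100.0
print(guarded_output(35.0, 63.5))   # 50.0
```

The guard does not make the network any smarter; it only limits where a wrong "least wrong" answer can do harm.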

Since a neural network is essentially a black box, you don't know how it arrived at its answer. For example, if you designed and trained a neural network to recognize a smiley face in a photo, you would have no idea whether the network had simply memorized the training images that contained smiley faces or whether it actually recognizes a smiley face. Read more here.

(3) They do not learn

They cannot train themselves. Networks with feedback loops from the outputs back to the inputs are better at picking the least wrong output, but they still do not learn. Training is a deliberate activity, and a network is either in a training state OR an operating state.
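The split between training and operating can be sketched in a few lines of Python, assuming a lookup-table neuron like the Artificial Neuron described in this article. The `TableNeuron` class is hypothetical: it stores trained input/output pairs, and once it is switched to its operating state it only answers, and any attempt to train it raises an error.

```python
# A minimal sketch of the "training OR operating" split
# (hypothetical class, lookup-table neuron assumed).

class TableNeuron:
    def __init__(self):
        self.table = {}
        self.mode = "training"

    def train(self, inputs, output):
        if self.mode != "training":
            raise RuntimeError("network is operating; training is disabled")
        self.table[tuple(inputs)] = output

    def freeze(self):
        """Switch from the training state to the operating state."""
        self.mode = "operating"

    def respond(self, inputs):
        # In operation the table is only read, never updated.
        return self.table.get(tuple(inputs))

n = TableNeuron()
n.train((1, 0), 1)
n.freeze()
print(n.respond((1, 0)))  # 1
```

Nothing the neuron sees while operating changes what it knows; only another deliberate training session can.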

(4) They are nothing like how a human brain works – not even a distant approximation

Human brains can learn. They are adaptive. Their design is completely different. Human neurons typically have more feedback connections than feed-forward connections, indicating that the human algorithm is far more of a feedback system than a feed-forward one. Artificial networks generally have feedback active only during training. Human brains work by memorizing the invariant form; that is, they can abstract structure from a pattern. Artificial networks cannot.

If you hear someone sing "Happy Birthday to You", you recognize the melody no matter what key it is sung in. Your brain has stored the invariant form: the change of frequency, or interval, from one note to the next. Your brain combines this invariant memory with the actual input (feedback) to predict the next note you will hear. To design an artificial neural network that recognizes the song, you would have to teach it the song in every key.
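The invariant form in the melody example is easy to write down. The Python sketch below assumes a melody stored as MIDI note numbers. Storing the steps between notes, rather than the notes themselves, gives a representation that does not change when the song is transposed. It illustrates the idea of an invariant representation, not how the brain actually encodes one.

```python
# Melody stored as MIDI note numbers (60 = middle C).
# Opening of "Happy Birthday to You" in C major: G G A G C B
in_c = [67, 67, 69, 67, 72, 71]
# The same phrase sung two semitones higher (D major): A A B A D C#
in_d = [n + 2 for n in in_c]

def intervals(notes):
    """Semitone steps between consecutive notes: the key-independent form."""
    return [b - a for a, b in zip(notes, notes[1:])]

print(in_c == in_d)                        # False: the raw notes differ
print(intervals(in_c) == intervals(in_d))  # True: the intervals are identical
print(intervals(in_c))                     # [0, 2, -2, 5, -1]
```

A recognizer that memorized `in_c` note for note would miss `in_d`; one that stores `intervals(in_c)` matches the song in any key.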

Truth Tables

Example of an AND Boolean logic gate

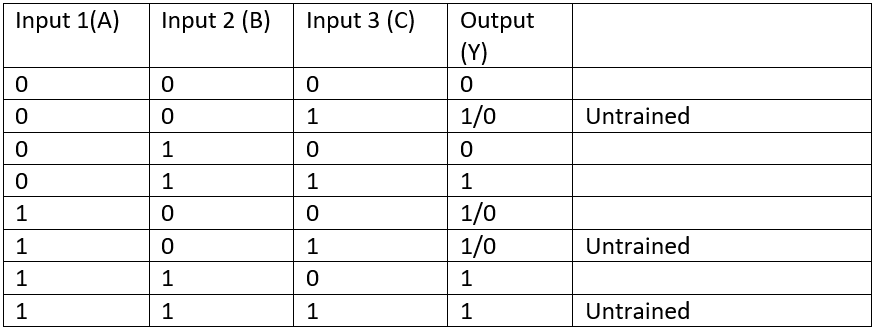
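The same AND truth table can also be generated in Python by enumerating every input combination. This is a minimal sketch for a two-input gate:

```python
from itertools import product

# Every input combination of a two-input AND gate, with its output.
rows = [(a, b, a & b) for a, b in product((0, 1), repeat=2)]

print("A B | A AND B")
for a, b, out in rows:
    print(a, b, "|", out)
```

A logic gate has a complete truth table: every possible input pattern has a defined output, which is exactly what a trained Artificial Neuron lacks.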

Example of a (very simple) Artificial Neuron Truth Table

The Neuron is well designed if it applies an appropriate output in untrained scenarios by recognizing which trained input pattern is closest to the untrained input pattern being presented and choosing the output of that closest relative.

Example: Imagine the input pattern being presented is 0,0,1. Look in the table. It is untrained, so the Neuron has to decide which output is best. It looks through the table for the closest pattern. It finds 0,0,0 (pretty close, differing in only one position) and 1,0,0 (also pretty close, with the same two zeroes and a single 1 as the input). Depending on how the Neuron is designed, for example whether it compares patterns bit by bit or simply counts ones and zeroes, it will pick one of those patterns as the closest and select the corresponding output.
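The nearest-match lookup can be sketched in Python. Because the Neuron's table is not reproduced here, the trained rows below are hypothetical, chosen to include 0,0,0 and 1,0,0. Two possible notions of "closest" are shown: a bit-by-bit (Hamming) comparison and a simple count of ones. The two designs pick different neighbours for the untrained input 0,0,1, and therefore different outputs.

```python
# Hypothetical partial truth table for a three-input neuron (4 of 8 patterns trained).
TRAINED = {
    (0, 0, 0): 0,
    (1, 0, 0): 1,
    (1, 1, 0): 1,
    (1, 1, 1): 1,
}

def hamming(a, b):
    """Number of positions where two patterns differ (bit-by-bit comparison)."""
    return sum(x != y for x, y in zip(a, b))

def ones_difference(a, b):
    """Difference in how many 1s each pattern contains (ignores position)."""
    return abs(sum(a) - sum(b))

def respond(inputs, distance):
    """Return the trained pattern closest to the inputs, and its output."""
    closest = min(TRAINED, key=lambda pattern: distance(inputs, pattern))
    return closest, TRAINED[closest]

print(respond((0, 0, 1), hamming))          # ((0, 0, 0), 0)
print(respond((0, 0, 1), ones_difference))  # ((1, 0, 0), 1)
```

The choice of distance measure is a design decision, and it directly decides what the "least wrong" output turns out to be.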

Read about deep learning here


Wired - "New Theory Cracks Open the Black Box of Deep Neural Networks"